D-Lib Magazine

January/February 2013

Volume 19, Number 1/2

Table of Contents

Archiving and Recovering Database-driven Websites

Michael Rumianek

Universität Duisburg-Essen, Duisburg, Deutschland & Global Village GmbH, Voerde, Deutschland

michael.rumianek@uni-duisburg-essen.de

doi:10.1045/january2013-rumianek

Printer-friendly Version

Abstract

An ever increasing amount of information is provided by database-driven websites. Many of these are based on Content Management Systems (CMS). CMS typically separate the textual content from file content and store the textual content within a database while files are stored in a directory structure of a file system. For archiving and preservation of such websites, in many cases several tools are needed to archive the file data and the database data separately in different container formats. The database data may be especially difficult to archive since vendor specific implementations of datatypes constrict restoring the archive on different systems. The author developed and implemented a procedure that enables storing both file and database data in a single XML document based on an XML Schema, where the data in the database are mapped into a standardized form to facilitate recovery on different systems. The mapping of the complete content into only printable characters allows preservation of the archive in multiple ways. Setting up a highly automated cycle of archiving and restoring website content by using a Version Control System (VCS) is also suggested.

1 Introduction

Across the Internet, a large amount of digital information is available and provided to users by websites using a variety of techniques. Many current websites rely on static content, maybe extended by dynamic elements such as Flash elements or JavaScript. From a preservation perspective, websites using these techniques can be archived quite simply by using crawlers like HTTrack [10]. Using these tools, websites are downloaded as deeply as possible and prepared for use offline.

W3Techs discovered that other websites — about 30 percent of existing websites — are using Content Management Systems (CMS) to provide their content [14]. In most cases CMS based websites are database-driven since they store most elements, like textual content and partial formatting, within the database. Archiving CMS based websites proves much more difficult, because there may exist different views on the content depending on the browser and/or permissions of each user. Recovering a CMS based website from an archive generated by a crawler is not possible either, since the content archived by the crawler is affected by the crawler's permissions and configuration, and only a single view is archived. Therefore, the database itself is not recoverable. For institutions such as public organizations that have the obligation to, or would simply like to, preserve their websites as they are, a different format is required to archive database-driven or CMS based websites. The format must provide the functionality to recover the complete website in the original technique and view.

In this paper, a procedure is presented that overcomes the problems faced by archivists of database-driven websites. First, as a basis for understanding, current workflows needed to archive database-driven websites without using a crawler are explained. These explanations are followed by a discussion of the new approach of using existing standardized techniques, and combining them in a comprehensively usable procedure. A description of an implemented prototype tool shows the advantages of this procedure.

2 Background

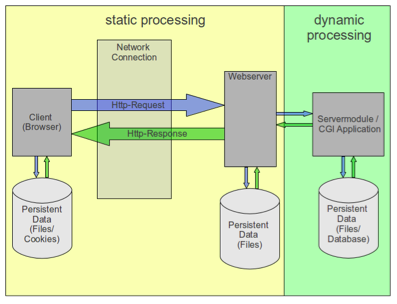

To find an acceptable process for archiving websites it is necessary to analyze the differences between the major classes of static and dynamic websites. Figure 1 gives an overview of the website content provided to a user's browser beginning with an HTTP Request by the browser and ending with the HTTP Response from the web server back to the browser. The process shown is valid for both static and dynamic web content.

Figure 1: Process of generating the displayed website in the browser of a client in the case of a database-driven website

2.1 Static websites

For a static website, the web server will load persistent data as a file and return it to the browser without processing it in any way. The display of the content is managed by the browser itself. There are two simple ways to archive static websites: by downloading a copy of the files using a crawler, or, by getting a copy of the necessary folders containing all of the files from the site's operator.

2.2 Dynamic websites

In contrast to static websites, dynamic websites are mostly database-driven. The static processing of an HTTP Request is extended by a dynamic process on the server side that modifies the static content or generates the whole content before returning it to the web server (see Figure 1). The modification/generation is done on the fly by querying information from a database and possibly linking to other resources. Database-driven websites typically consist of files of program code that generate the content, and binary resources such as images that are not stored in the database.The database contains all of the textual content of the pages, and also formatting information.

The main difference between static and dynamic websites is the fact that the content displayed depends on the data sent by the HTTP Request (e.g., user permissions, browser, localization and geolocation of the querying browser) and a portion of the content is generated on the fly. Due to the changing content, archiving of a dynamic website by using a crawler may lead to different, non-reproducible views. Archiving the website by obtaining a copy of all files is very difficult since the content stored in the database may be saved as files but may not be recoverable anymore. A way to avoid this problem is to transform all data into a file format that is independent from the underlying database system.

3 Current preservation approaches and solutions

The proper preservation of websites of public institutions has become an important subject because city councils offer more and more services on their websites. The lack of a closed process for archiving database-driven websites leads to recommendations for static archiving [1] even though it may result in some loss of information. In many cases the preservation of the database causes a problem [13], [3]. As a result, archiving of database-driven websites within a single archive file is currently not found in practice. Instead, database-driven websites are often archived in two separate archives: the file data and the database data. An example of this was introduced by DeepArc [2]. Their method of archiving of databases requires the provisioning of an XML Schema which fits the necessary database structures. Unfortunately, the development of this XML Schema requires a thorough understanding of the database content.

In many cases, using the native export functionality of the database system leads to binary or database vendor specific formats which are not restorable on systems that use a different database system. The use of database mapping tools ([5], [4]) implies the installation of software in the operating system of the hosting server and the availability of the tool for a long period of time.

The uncertainties described above and the lack of a simple format for archiving databases that is vendor independent and easy to use is a significant issue for long term preservation [1].

4 Requirements for preservation of database-driven websites

The preservation of a dynamic website definitely requires the ability to recover the archived content on a system that is different from the source system. This requirement for archiving once and restoring elsewhere poses a challenge due to vendor specific extensions or the limitations of many database systems.

For usability, the restoration process should be automated as far as possible. Therefore, all archived content has to be transformed into a generalized format which ideally is easy to use and understand and, of course, machine and human readable. In many cases the Extended Markup Language (XML) complies with these requirements. A very important property of XML is the ability to verify and validate the correctness and completeness of a document structure and recognize malformed content. By using an XML Schema the structure can be easily described. Thus, a procedure is needed to convert and protocol all content of dynamic websites into an XML based archive document.

5 Archiving Procedure

In general, the archiving of database-driven websites can be divided into two parts: archiving file data that are located within the directory structure of the website and archiving the database data. The sections have to be approached in different ways. Because the algorithms that would be used to obtain and prepare the information included in the archive document may depend on the implementation, the following section does not describe them in detail, but rather gives a general overview.

5.1 Defining an XML Schema

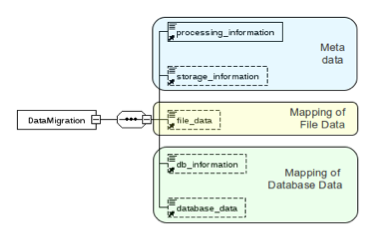

For archiving data by using an XML Schema — a structured definition for an archive document that deals with file data and database data in separate sections — an appropriate XML Schema was developed. In addition to the required sections for storage of the different types of data on the archived website, the storage of information about the source system at the time of archiving (system Metadata) may be useful. These Metadata may be helpful for discovering incompatibilities when it is necessary to recover the website after a long period of time. Figure 2 shows the three important sections of the XML Schema for the archiving process, which are:

- Metadata

- Mapping of File Data

- Mapping of Database Data

Since the procedure of archiving should ideally be usable on both static and database-driven websites, the sections containing the mapping of file and database data may, unlike the Metadata, be empty within the constructed archive document.

Figure 2: Overview of the XML Schema to be used for archive documents

5.1.1 Archive Metadata

The Metadata section is required and contains processing and storage information. The processing information consists of details regarding the source system, e. g., the underlying operating system and the localization of the system. This information is acquired at the beginning of the ingestion process and ensures that the most important properties of the host system are available, as they may be helpful for a recovery. The storage information contains the starting time of the ingestion. Since the section of Metadata is currently used for documentation purposes only, it may be enhanced by including data needed for archiving in digital libraries, such as a DOI [6].

5.1.2 Archiving File Data

It is necessary to preserve the filename and the relative path to the file in order to recover a website's archived file data. This information is stored as attributes of the file element located in the file_data element shown in Figure 2. The file data are stored within the file element. Files may consist of printable and non-printable characters, therefore, mapping into a transfer format is required. Mappings like these are currently in use at many points, e.g., email attachments. BASE64 encoding is used to accomplish the mapping. During the mapping process at ingestion, each file is encoded into BASE64 data and put into the archive document.

5.1.3 Archiving Database Data

Since database-driven websites typically use relational databases, the procedure described here focuses on this type of database even though the mapping of other types is generally possible.

Because relational databases may consist of several, possibly referenced tables, archiving the database is much more complex than archiving file data. In general, the communication with a database system is standardized by the SQL in ISO 9075 [7]. However, many vendors of database systems extend the standardized SQL. In order to avoid dealing with archive documents containing vendor specific database data structures, a mapping to standardized SQL elements is necessary. Once the ingestion of the database has begun, the analysis of the tables and any existing references they may have is performed first. As a result of these analyses the tables are ordered to enable a recovery from the archive. References in the database structure may require implementing additional steps during the recovery of the database data. Circular references [12], [8] for example, will cause such extra handling during recovery. A more detailed view of the handling of references within the database is outside the scope of this article, and the author restricts the description to the most important and basic facts.

During protocoling of the database data the mapping of non-standardized elements of SQL to standardized elements is performed. For easy maintenance of the required data type mapping, the implementation described here uses a configuration file in those instances where data type mapping may be configured.

As shown earlier in Figure 2, the mapping of databases consists of the elements db_information and database_data. The element db_information preserves basic vendor information from the database such as vendor name and version number. These data are preserved to keep the main properties of the database system at the time of the ingestion, but it is not necessary for recovering the database data.

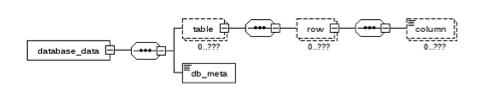

The section database_data contains the database data itself. These data are arranged in several elements as shown in Figure 3. The element db_meta provides information about the archived database (size, amount of tables and table rows) which can be used for checking after recovering. The archived database data are stored in multiple table elements which may consist of multiple row elements. Every row element may consist of multiple column elements. Since the content of the database is stored in the columns of a row, in the archive document the data are also stored in the column elements. Due to the fact that most databases support the storage of binary data like images within the columns, it is also necessary to map the data into a transfer format. As during processing file data, the column data are encoded to BASE64 format before being protocoled to the archive document.

Figure 3: Storage of database data

The result of processing file and database data is an archive document that meets the requirements defined in the XML Schema. Since all data, files and database content are mapped with the BASE64 algorithm, the archive document consists only of printable characters. Since the recover procedure involves processing an XML document, it can be done using various freely available XML Parsers. The XML Parser reads the archive document line by line and executes different tasks based on the XML Element with its attributes. While processing file data, the path to the file and its name are read followed by the encoded file content. The file content will be decoded and stored with the read filename at the read path.

During the processing of database data, tables and rows are processed as they are found in the archive document. Finding the 'Element-Start' of a table element would cause the creation of the required table with its columns and references. Embedded in the 'Element-Start' and 'Element-End' of the table elements containing the table are rows with the column data. The restore process of tables is done row by row.

5.2 Implementing the procedure

The storage of transformed content in a document, as required by the XML Schema, is the core of the procedure and is not limited to any specific hardware and software. An implementation of the procedure may be created in different programming languages. The first implementation of the procedure is done in Java which has the advantages of high portability and useful XML processing facilities. The resulting software is a small application that may be extended by drivers for different databases. With the aid of a Java Virtual Machine (JVM) it is executable on most source systems. This first implementation relies on the availability of a JVM on the source system, but later versions may abandon this requirement by enhancing the networking functionality. However, these changes will not affect the structure of the archive document since the XML Schema that is used will remain the same.

A simple configuration file allows users to configure the necessary properties of the website to be archived. A Graphical User Interface (GUI) is not provided by the application, but the current implementation works with a single command line command for archiving or recovering, in order to facilitate running the application on systems that do not have a GUI. This design also allows automated, scheduled archiving.

5.2.1 Testing

The current implementation can be described as a fully working, full-scale prototype that has passed several tests in different scenarios. These test scenarios included the migration of data from one database to another database from a different vendor, such as MySQL to PostgreSQL, SYBASE ASE to PostgreSQL or MySQL, and vice versa. Also, transferring data between databases from the same vendor was tested. The websites that were selected for the tests were varied, and included static, CMS based, and sites hosted by a Java Servlet Container such as Apache Tomcat. Implementation of the procedure in Java allows easy testing on different platforms. Tested platforms included Windows, Linux and a self built Openembedded OS (Kaeilos and Ångström) running on an Embedded System. The amount of data for archiving varied between several MB (on an Embedded System) and several million rows spread over nearly 200 tables where one of the tables contained about one million rows (SYBASE ASE) and where the latter leads to an archive document of several GB. Further tests were made with databases that are normally not in use for websites (MS Access and SQLite). These types of databases were successfully archived both by processing them as described above, and also by handling them as a file.

The test of archiving and restoring a CMS driven website consisting of around 2,800 files with approximately 60 MB, and a database of 100 tables containing about 15,000 rows, shows that it can be done in about 40 seconds. However, in general, the performance depends on the performance of the hardware. Further tests of the implementation were performed by archiving a directory structure containing several GB of file data, and archiving and recovering a database containing about 15 million datasets and a size of about 15 GB. Successfully passing these tests shows convincingly that the procedure will be usable for most websites. The maximum size of a single file limits the size of an archive document. Since most file systems, such as EXT4, NTFS, and XFS have a file limit ranging from several TB to EB, this limitation is not really a restriction.

Conventional archive formats that rely on binary archive formats like zip may become corrupt on every single erroneous Bit [11]. In contrast, tests performed by manipulating an existing archive document before restoring it show that the XML based archive only loses the affected element, which may be a file or content of a single column of a single row.

5.3 Proposed archive and restore cycle for websites

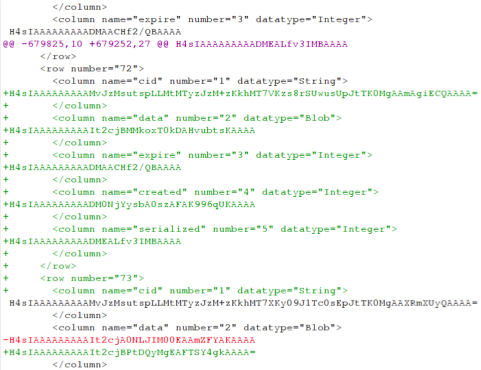

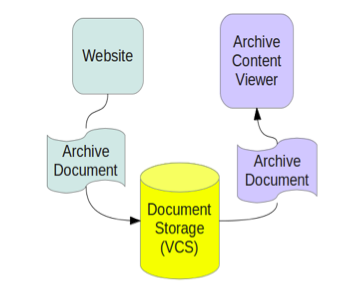

An archive document created using the procedure described above consists only of printable characters, which allows for a choice of archiving options, at least as hard copy. This format also enables using Version Control Systems (VCS) like GIT to store multiple archive documents of a given website, with the ability to track changes and easily recover historic versions. In Figure 4, tracking changes in a document is shown. Since VCS only store the differences found in the archive documents, every single snapshot put into the VCS will only consist of actual changes. The differences in Figure 4 show an added database row. The advantage of using a VCS to store the archive documents is that different snapshots of a website can be made available with a small set of commands by reducing the storage space since only differences in the snapshots are stored.

Figure 4: Tracking of database changes between different versions of an archive document by using a Version Control System

(red: removed content, green: added content, black: unchanged)

Figure 5: Archiving and providing previous versions by using VCS

The prototype implementing the procedure can be used for automated archiving and restoring. An automated cycle, from taking a snapshot to making different versions available, can be established. Figure 5 shows an example of an automated cycle in which scheduled snapshots of a website are taken and the archive documents that are generated are submitted to the VCS. Historic versions of the website may be shown in an Archive Content Viewer that obtains a requested version of the website as an archive document via VCS. A virtual machine may also work as an Archive Content Viewer. Switching between historic versions of a website means switching to a different version of the archive document and recovering it.

An automated cycle of automated archiving and restoring was established several times during testing of the prototype. To make the necessary setup steps easy, checklists for the tasks of setting up an archiving environment for manually executed archiving and automated archiving, and restoring to an Archive Viewer, were developed. Documentation for the prototype implementation, as well as the checklists and other setup tools and examples, is being developed.1

6 Conclusion

The procedure described in this paper facilitates the archiving of websites in a closed process combining file data with database data in a single XML document. In contrast to other tools, for example DeepArc, the XML Schema does not depend on the website that is to be archived [9]. Since the database of the archived website is completely included in the resulting document, recovering the website will restore the website to what it was at the moment of ingestion.

The current implementation of the procedure may be run on a system without the installation of software by the system administrators, and without the need to be familiar with the server system. Mapping vendor specific modifications of functionality, e.g., data types to ISO Standard, will allow users to recover the archive on a different system than the source system, including a database from a different vendor.

Converting data content of files and databases into a standardized format containing only printable characters enables the use of a Version Control System. The ability of a VCS to store only differences, storing multiple archived stages of a website requires reduced storage space. Since archiving and recovering of a website involves only a few commands, a highly automated process chain may be setup.

7 Outlook

To improve usability for non-specialist users, future development should include providing an optional GUI for archiving and restoring without needing to enter commands on the command line. The addition of a toolbox for setting up, using and maintaining an archiving chain, including archiving in a VCS and an Archive Content Viewer, would also be a valuable enhancement.

Notes

1 The tools and documents for the prototype may be obtained directly from the author.

References

[1] BKK: Empfehlung zur Speicherung von kommunalen Webseiten — Teil 2: Technik, 2011.

[2] Aubery, S.; Hafri, Y.: DeepArc, 2005.

[3] Brown, A.: Archiving Websites: A Practical Guide for Information Management Professionals, Facet Publishing, London 2006, ISBN: 978-1856045537.

[4] Copyright DRPU Software Pvt. Ltd.: Best Database Conversion Solution, 2010.

[5] EasyForm Inc.: ESF Database Migration Toolkit, 2010.

[6] International DOI Foundation: DOI Handbook, 2012.

[7] ISO/IEC: Information technology — Database languages — SQL — Part 1: Framework (SQL/Framework), 2011.

[8] Ouzounov V.: Conception and Prototypical Implementation of a Tool for Mapping and Reconstruction of Database Data Utilizing a Cross-Platform Exchange Format, Universität Duisburg-Essen, diploma thesis, 2006.

[9] Rouges, S.; Mensch, A.; Aubry, S.: DeepArc Users Guide, 2004.

[10] Roche, X.: HTTrack Website Copier, 2011,

[11] Roelofs, G.; e.a.: Archive Limits and Known Vulnerabilities, 2008.

[12] Sunderraman R.: Fundamentals of Database Systems: Laboratory Manual, 2006, Georgia State University.

[13] Pinsent, E.; e.a.: The Preservation of Web Resources Handbook, 2008.

[14] Q-Success: Usage of content management systems for websites, 2012.

About the Author

|

Michael Rumianek holds the degree of a Dipl.-Ing. (diploma engineer) which is similar to a Master's degree. He is a Senior Software Developer for an Internet Service Provider (Global Village GmbH) and works on archiving and transferring websites, and other projects, with CORE Internet Council of Registrars. Since 1999 his academic work as a scientist at the Institute of Computer Science of the University of Duisburg-Essen in Germany has included research projects as well as lecturing. From 2001 to 2010 he lectured courses on 'Internet Technologies and Web-engineering'.

|

|