
D-Lib Magazine

January/February 2014

Volume 20, Number 1/2


Big Humanities Data Workshop at IEEE Big Data 2013

Tobias Blanke

Göttingen Centre for Digital Humanities

Department of Digital Humanities, King's College London

tobias.blanke@kcl.ac.uk

Mark Hedges

King's College London

mark.hedges@kcl.ac.uk

Richard Marciano

University of North Carolina at Chapel Hill

richard_marciano@unc.edu

doi:10.1045/january2014-blanke


Abstract

The "Big Humanities Data" workshop took place on October 8, 2013 at the 2013 IEEE International Conference on Big Data (IEEE BigData 2013), in Santa Clara, California. This was a day-long workshop featuring 17 papers and a closing panel on the future of big data in the humanities. A diverse community of humanists and technologists, spanning academia, research centers, supercomputer centers, corporations, citizen groups, and cultural institutes gathered around the theme of "big data" in the humanities, arts and culture, and the challenges and possibilities that such increased scale brings for scholarship in these areas. The use of computational methods in the humanities is growing rapidly, driven both by the increasing quantities of born-digital primary sources (such as emails, social media) and by the large-scale digitisation of libraries and archival material, and this has resulted in a range of interesting applications and case studies.

Introduction

Big Data is an increasingly ubiquitous term used to describe data sets whose large size precludes their being curated or processed by commonly available tools within a tolerable timespan. What constitutes "big" is, of course, a moving target as hardware and software technologies evolve. Moreover, "big data" is not just a matter of sheer volume as measured in terabytes or petabytes; other characteristics of data can affect the complexity of its curation and processing and lead to analogous requirements for new methods and tools for effective analysis. This idea is captured by Gartner's "3Vs" definition of big data as "high volume, high velocity, and/or high variety information assets" (Laney, 2012).

Thus relatively small but complex data sets can constitute "big data", as can aggregations of large numbers of data sets that are individually small. Similarly, while much big data work involves using analytical techniques to identify relationships and patterns within large aggregations of data, and to make predictions or other inferences based on these data sets, identifying the (perhaps very small) pieces of evidence relevant to a research question within a much larger body of information falls equally under the umbrella of big data.

According to its proponents, Big Data has the potential to transform not only academic research but also healthcare, business, and society. The 2013 IEEE International Conference on Big Data (IEEE BigData 2013), which took place 6 - 9 October 2013 in Santa Clara, California, USA, was the first in a series of annual conferences that aim to provide a showcase for disseminating, and a forum for discussing, the latest research in Big Data and its applications. The conference included sessions on theoretical and foundational issues, standards, infrastructure and software environments, big data management and curation, methods for search, analysis and visualisation, security and privacy issues, as well as applications in a variety of fields both academic and industrial.

The conference also included a number of workshops offering in-depth discussion of specific topics. In particular, the workshop on Big Data and the Humanities addressed applications of "big data" in the humanities, arts and culture, and the challenges and possibilities that such increased scale brings for scholarship in these areas. The use of computational methods in the humanities is growing rapidly, driven both by the increasing quantities of born-digital primary sources (such as emails, social media) and by the large-scale digitisation of libraries and archival material, and this has resulted in a range of interesting applications and case studies.

At the same time, the interpretative issues raised by using such "hard" computational methods to answer subjective questions in the humanities highlight a number of the concerns that have been raised about "big data" approaches in general.

Program and Attendance

The event was a day-long workshop featuring a total of 17 papers (8 long papers, 3 Digging Into Data Challenge project papers, and 6 short papers) and a closing panel on the future of big data in the humanities, which involved workshop participants and funding agency representatives from the US National Endowment for the Humanities (NEH) and the UK Arts & Humanities Research Council (AHRC). Selection was very competitive, and the organizers were regrettably able to accept only a third of the submitted papers. Details of the sessions, including slides, papers, and the full list of authors and co-authors, are available from the workshop program.

While held in California, the workshop achieved a remarkable balance of international speakers from North America and Europe. Of the 17 talks delivered, 9 were by speakers from the US, 5 from the UK, 1 from France, and 2 from Canada. The speakers' affiliations were broadly divided between humanists (English, Archaeology, Digital Humanities, History, Film Studies, Philosophy, Libraries, Archives) and technologists (Information Science, Media Studies, Computer Science). A closer look at the papers' co-authors reveals widespread collaboration between these two groups, with participant backgrounds spanning academia, research centers, supercomputer centers, corporations, citizen groups, and cultural institutes. With some 60 participants, this was one of the best-attended workshops at the IEEE BigData 2013 conference, attesting to the interest and vitality of the growing field of big humanities data.

Long Papers

Finding and clustering reprinted passages in nineteenth-century newspapers, presented by David Smith from Northeastern University, USA.

Algorithms are developed for detecting clusters of reused passages in OCR-ed nineteenth-century newspapers, and geographic and network analyses are then performed on the results.
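Detecting reprinted passages is a form of text-reuse detection. The sketch below is not the presenters' algorithm; it is a minimal illustration, assuming that shared word n-grams ("shingles") are a usable reuse signal, of how documents might be grouped into reprint clusters. The function names, the shingle length `n=5`, and the `min_shared` threshold are hypothetical choices, and noisy OCR output would in practice call for more tolerant matching.

```python
from collections import defaultdict
from itertools import combinations


def shingles(text, n=5):
    """Return the set of word n-grams (shingles) in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def cluster_reprints(docs, n=5, min_shared=2):
    """Group document ids whose texts share at least `min_shared` shingles.

    `docs` maps a (hypothetical) document id to its text. An inverted index
    from shingle to documents finds candidate pairs; a union-find structure
    then merges them, so reprints of reprints land in the same cluster.
    """
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for sh in shingles(text, n):
            index[sh].add(doc_id)

    # Count how many shingles each candidate pair of documents shares.
    shared = defaultdict(int)
    for doc_ids in index.values():
        for a, b in combinations(sorted(doc_ids), 2):
            shared[(a, b)] += 1

    parent = {doc_id: doc_id for doc_id in docs}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for (a, b), count in shared.items():
        if count >= min_shared:
            parent[find(a)] = find(b)

    clusters = defaultdict(set)
    for doc_id in docs:
        clusters[find(doc_id)].add(doc_id)
    # Only clusters with more than one member indicate reuse.
    return [c for c in clusters.values() if len(c) > 1]
```

Using an inverted index avoids comparing every pair of pages directly; only pages that share at least one shingle are ever counted together, which matters when the collection runs to millions of pages.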

Classifying humanities collections by genre, presented by Ted Underwood from the University of Illinois, Urbana-Champaign, USA.

This work focuses on mining large digital libraries and dividing them by genre, taking into account time-varying genres and structurally heterogeneous volumes.

Analyzing and visualizing public sentiment regarding the royal birth of 2013 using UK Twitter data, presented by Blesson Varghese from the University of St. Andrews, UK.

A framework for the analysis and visualization of public sentiment is developed. Machine-learning and dictionary-based approaches are contrasted.
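To make the dictionary-based side of that comparison concrete, here is a minimal lexicon-based polarity scorer. It is not the presenters' framework: it assumes a hypothetical toy lexicon (`LEXICON`) and simple regular-expression tokenisation, and the machine-learning approach they contrasted it with is not shown.

```python
import re

# Hypothetical toy lexicon mapping words to polarity scores; real studies
# use curated resources with thousands of scored entries.
LEXICON = {
    "happy": 1, "congratulations": 1, "love": 1, "wonderful": 1,
    "sad": -1, "waste": -1, "boring": -1, "hate": -1,
}

# Lower-case word tokens; punctuation and '#' are dropped.
TOKEN = re.compile(r"[a-z']+")


def lexicon_score(tweet, lexicon=LEXICON):
    """Sum the polarity of every lexicon word that appears in the tweet."""
    return sum(lexicon.get(tok, 0) for tok in TOKEN.findall(tweet.lower()))


def label(tweet, lexicon=LEXICON):
    """Map the summed score to a positive/negative/neutral label."""
    score = lexicon_score(tweet, lexicon)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

For example, `label("Congratulations! Wonderful news about the royal baby")` sums two positive entries and returns `"positive"`. The approach is transparent and needs no training data, but it cannot see negation or sarcasm, which is one reason such studies also compare it with learned classifiers.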

A prototype system for the extraction and analysis of the visual features of digitized printed texts, presented by Neal Audenaert from Texas A&M and Natalie Houston from the University of Houston, USA.

This work describes VisualPage, a prototype system for the extraction and analysis of the visual features of digitized printed texts, such as page layout.

Use of entity resolution for processing large collections, presented by Maria Esteva from the University of Texas at Austin, USA.

Techniques are proposed to assist curators in making data management decisions for organizing and improving the quality of large unstructured humanities data collections.

[Image from Richard Marciano's iPhone: Maria Esteva, U. of Texas at Austin (TACC), USA.]

Developing a generic workspace for big data processing in the humanities, presented by Benedikt von St. Veith from the Juelich Supercomputing Centre, Germany, USA.

A cyberinfrastructure for the humanities is proposed in which Hadoop and Pig are combined with data grid solutions based on iRODS.

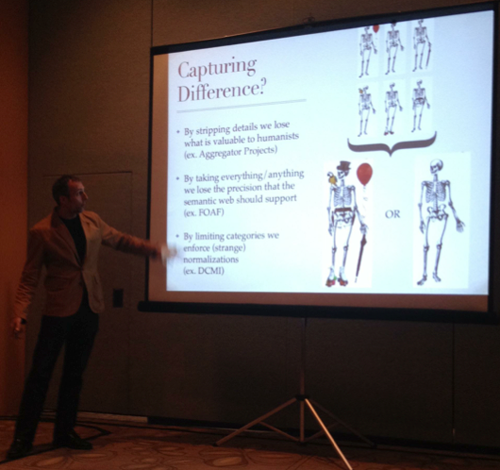

The curious identity of Michael Field and the semantic web, presented by John Simpson, University of Alberta, Canada.

A worked example shows that semantic web ontologies lack the nuance needed to deal with the complex relationships between names and people.

[Image from Richard Marciano's iPhone: John Simpson, U. of Alberta, Canada.]

The Google Cultural Institute Platform, presented by Mark Yoshitake from the Google Cultural Institute, France.

A large-scale system for ingesting, archiving, organizing, and interacting with digital cultural assets is discussed.

Digging into Data Challenge Papers

Visualizing news reporting on the 1918 influenza pandemic, presented by Kathleen Kerr from Virginia Tech, USA.

The vaccination discourses of 1918-1919 are studied, and the methodological implications of applying data mining algorithms to big data are examined.

Building a visual analytical tool for processing human rights audio-video interviews, presented by Lu Xiao and Yan Luo from The University of Western Ontario, Canada.

The Clock-based Keyphrase Map (CKM) prototype is proposed. This tool combines text mining and information visualization techniques using time-series data.

Processing human rights violation reports, presented by Ben Miller from Georgia State University, USA.

A framework to process human rights violation reports is proposed in which narratives that traverse document boundaries are generated.

Short Papers

Bibliographic records generated by libraries as humanities big data, presented by Andrew Prescott, from King's College London, UK.

This paper examines how bibliographic records generated by libraries represent a homogeneous form of humanities big data akin to certain types of scientific content.

Experimenting with NoSQL technologies for Holocaust research, presented by Tobias Blanke, King's College London, UK.

Graph databases are considered for the modeling of the heterogeneous data found in Holocaust research.

Geoparsing and georeferencing of Lake District historical texts, presented by Paul Rayson from Lancaster University, UK.

Place names are identified in historical texts, and tools are developed to visualize these names on maps.
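Geoparsing typically combines two steps: finding place names in text and resolving them to coordinates. The sketch below illustrates only the simplest version of both, a longest-match lookup against a gazetteer. It is not the Lancaster team's pipeline; the mini-gazetteer `GAZETTEER` and its approximate coordinates are hypothetical illustrations, and real historical texts require historical gazetteers, spelling variants, and disambiguation.

```python
import re

# Hypothetical mini-gazetteer: lower-case name -> (latitude, longitude).
# Coordinates are approximate and for illustration only.
GAZETTEER = {
    "keswick": (54.60, -3.13),
    "grasmere": (54.46, -3.02),
    "derwent water": (54.58, -3.15),
}


def geoparse(text, gazetteer=GAZETTEER, max_len=3):
    """Return (name, coordinates) pairs found in text, preferring longest matches."""
    words = re.findall(r"[a-z]+", text.lower())
    found = []
    i = 0
    while i < len(words):
        # Try multi-word names first so "derwent water" beats "derwent".
        for n in range(min(max_len, len(words) - i), 0, -1):
            candidate = " ".join(words[i:i + n])
            if candidate in gazetteer:
                found.append((candidate, gazetteer[candidate]))
                i += n
                break
        else:
            i += 1  # no name starts at this word; move on
    return found
```

Run on "We walked from Keswick along Derwent Water, then on to Grasmere.", it returns the three names in order of appearance with their coordinates, ready to be plotted as map markers.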

Crowdsourcing of urban renewal data, presented by Richard Marciano, UNC Chapel Hill, USA.

An open-source collaborative mapping environment prototype is being developed to support "citizen-led crowdsourcing" using archival content.

Big data myths, challenges, and lessons, presented by Amalia Levi, University of Maryland, USA.

A number of myths about humanities scholarship are proposed. Transnational humanities research is discussed, along with the challenges it raises for big data research in the humanities.

Emotion extraction from 20th century English books, presented by Alberto Acerbi, University of Bristol, UK.

Three independent emotion detection tools are applied to a corpus of 8 million digitized books available in the Google Books Ngram corpus.

Closing Panel

The workshop ended with a panel bringing together some of the presenters with representatives from funding bodies and leaders of research initiatives. The panel addressed some of the broader questions that big data poses for the humanities and related disciplines. First, there is the question of what humanities scholars will make of born-digital culture and the new material for humanities research that it provides. The panel gave a good indication of the future challenges created by this born-digital culture; interestingly, it seemed less concerned with the volume and velocity of this data than with questions of data quality and provenance. Data summarisation technologies and visualisations may give the impression that the quality of individual data items no longer matters; humanities scholars, however, are often interested in minute differences and in drilling down into data sets.

[From Richard Marciano's iPhone (left to right): Tobias Blanke, King's College London, UK; Christie Walker, Manager of AHRC's "History, Thought and Systems of Belief" theme, UK; William Seales, Google Cultural Institute, U. of Wisconsin-Madison, USA; Ted Underwood, U. of Illinois, Urbana-Champaign, USA; Barry Smith, AHRC Theme Fellow for the "Science and Culture" theme, UK; Brett Bobley, NEH Office of Digital Humanities, USA; (empty chair due to US government shutdown); Andrew Prescott, AHRC Theme Fellow for the "Digital Transformations" theme, UK.]

There are also research questions for the humanities that go beyond simply how to use big data in humanities research, for example questions about what big data means for the human condition. Anderson's announcement of an 'end of theory' comes to mind here (Anderson, 2008). Anderson meant the claim as a provocation, but it was quickly taken up across disciplines, and it would be valuable to investigate further, together with doctoral students, the extent and implications of these changes. Rather than heralding the 'end of theory', big data derives much of its disruptive power for traditional fields of enquiry not from giving up on theory altogether but from exploring new ways of looking at existing problems, and from applying new theories.

As well as these epistemological questions, big data raises fundamental practical questions about how humans want to live together. The first concerns those who are excluded from the wonderful digital world of big data. Who are the "big data poor" (boyd & Crawford, 2012), who are not represented in current streams of big data because they are not regarded as interesting to big datafication efforts?

The second practical question raised by the panel related to Edward Snowden's disclosure of National Security Agency Internet surveillance programs in 2013. How can we ensure that we can be forgotten in the world of big data, at least if we want to be? How can we measure what big data algorithms do to us and how they represent us? Governments are themselves big data organisations, indeed among the largest big data collectors of all, and trust in them as honest brokers of big data has been damaged. They even seem to collect for the sake of collecting: it is doubtful that some of the data described by Snowden can actually lead to any meaningful analysis, and some of these activities are otherwise hard to explain.

Looking Forward

The organisers do not intend this workshop to be a one-off event, but regard it rather as both the first in a series of workshops and as part of a broader cluster of activities around big data and the humanities. Plans are already underway for a follow-on workshop at IEEE BigData 2014, which will be held in Washington DC in October 2014. The workshop organisers have also set up the Big Humanities Data space, a site that they plan to use for providing updates on a number of shared workshops, projects, and awards that explore the development of algorithms and infrastructure for big data for the humanities.

This summary only scratches the surface of the presentations and of the discussions that followed; full papers from the workshop are available in the proceedings of the parent conference in IEEE Xplore. The next workshop will be announced via the Big Humanities website in the coming months, and we encourage interested parties to consider submitting a paper on their research, as well as to contact us with information about other activities, to help us in building up an international community that brings the insights and interests of humanists to the world of big data.

References

[1] Laney, Douglas. (2012) "The Importance of 'Big Data': A Definition". Gartner, Inc.

[2] Anderson, Chris. (2008) "The End of Theory: The Data Deluge Makes the Scientific Method Obsolete". Wired Magazine, 16.07.

[3] boyd, danah, & Crawford, Kate. (2012) "Critical Questions for Big Data: Provocations for a Cultural, Technological, and Scholarly Phenomenon". Information, Communication & Society, 15(5), 662-679. http://doi.org/10.1080/1369118X.2012.678878

About the Authors


Tobias Blanke is the director of the MA in Digital Asset and Media Management. His academic background is in philosophy and computer science, with a PhD from the Free University of Berlin on the concept of evil in German philosophy and a PhD from the University of Glasgow in Computing Science on the theoretical evaluation of XML retrieval using Situation Theory. Tobias has authored numerous papers and 3 books in a range of fields on the intersection of humanities research and computer science. His work has won several prizes at major international conferences including best paper awards. Since 2012, he has been a Visiting Professor at the Göttingen Centre for Digital Humanities.


Mark Hedges is Director of the Centre for e-Research, within the Department of Digital Humanities at King's College London. His original academic background was in mathematics and philosophy, and he gained a PhD in mathematics at University College London, before starting a 17-year career in the software industry. Dr. Hedges now works on a variety of projects related to digital libraries and archives, research infrastructures, and digital preservation, as well as teaching on MA programs at King's, and directing the MA program in Digital Curation.


Richard Marciano is a professor at the School of Information and Library Science at the University of North Carolina at Chapel Hill (UNC) and directs the Sustainable Archives & Leveraging Technologies (SALT) Lab. He is collaborating on a number of "big data" and digital humanities projects. He holds a B.S. in Avionics and Electrical Engineering, and an M.S. and Ph.D. in Computer Science, and has worked as a Postdoc in Computational Geography. He conducted interdisciplinary research at the San Diego Supercomputer Center at UC San Diego for over a decade, working with teams of scholars in the sciences, social sciences, and humanities.
